Concept

Model Context Protocol (MCP)

The Model Context Protocol (MCP) is an open, lightweight communication protocol that standardizes how applications provide context to large language models (LLMs). MCP enables the development of agents and complex workflows on top of LLMs and connects models with various data sources and tools.

Key Benefits

- A standardized, open protocol for connecting language models to tools, apps, and data sources

- Easy integration and interoperability across AI applications and platforms

- Simplified, consistent user experiences across different AI interfaces

- Flexibility to switch between apps while preserving context

- Reduced fragmentation and faster innovation across the AI ecosystem

Essentially, MCP acts as a universal language that allows an AI agent to communicate with a wide range of external resources without needing a unique integration for each one. It ensures a consistent and reliable flow of data between the agent and the tools it uses.

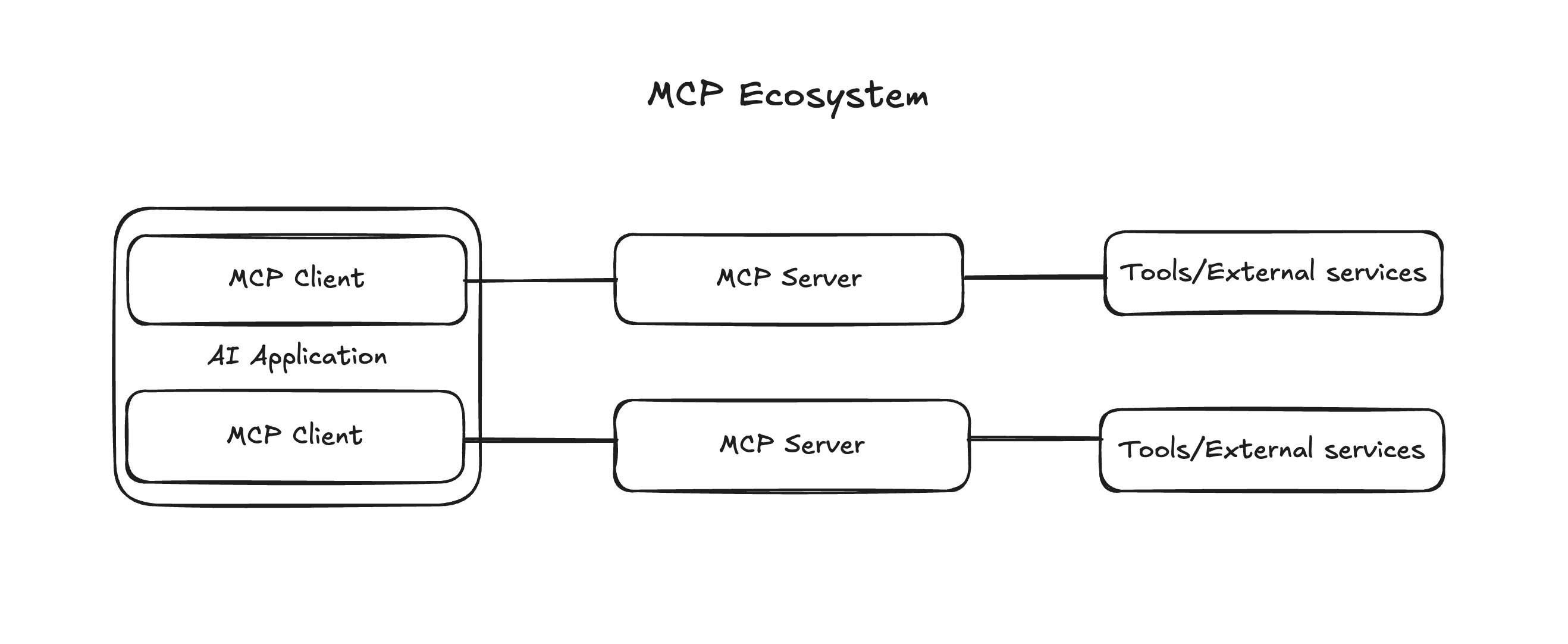

MCP Ecosystem

The MCP architecture consists of several key components that work together to enable seamless integration:

1. MCP Clients

In an AI application (e.g., Claude Desktop, AI IDEs), the Client component manages user interactions and coordinates tool usage, handling communication with a specific MCP Server.

- The Client manages user interactions, permissions, and the overall experience.

- It initiates connections to MCP Servers when external tools or data are needed.

- Embedded within the Host, it handles the low-level protocol details of MCP.

- Maintains a 1:1 connection with an MCP Server and bridges Host logic with external services.

Examples of MCP Clients

- AI Chat apps like OpenAI ChatGPT, Anthropic’s Claude Desktop

- AI-enhanced IDEs like Cursor, or tools like Continue.dev

- Custom AI agents and applications built with LangChain, smolagents, etc.

2. MCP Server

The MCP Server is an external program or service that exposes capabilities to AI models via the MCP protocol. It acts as a central hub for two-way communication between AI agents and MCP-compatible tools.

Server Capabilities

- Provide access to external tools, data sources, or services

- Act as lightweight wrappers around existing functionality

- Can run locally or remotely

- Expose capabilities in a standardized format for Clients to discover and use

3. MCP Tools

MCP Tools are executable functions or actions that an AI model can invoke through the MCP protocol. They allow models to:

- Query databases

- Call APIs

- Perform computations

Requirements for MCP Tools

- Must follow the MCP specification

- Provide a defined interface understandable by the MCP Server

- Use structured input/output formats

- Include clear documentation for purpose and parameters

Examples

- A tool to fetch real-time weather data

- A tool to generate an image from a text prompt

- A tool to look up customer data in a CRM system

AI Agents

An AI Agent is a system that uses an LLM to autonomously perform tasks by designing workflows with available tools.

Core Capabilities

- Reason: Understand user intent or task requirements

- Plan: Identify required tools to complete the task

- Act: Invoke tools via MCP

- Reflect: Analyze output to form final responses or take further action

In DronaHQ, AI Agents can automate workflows, respond to user inputs, and make data-driven decisions using MCP Tools to build production-ready apps aligned with company standards.

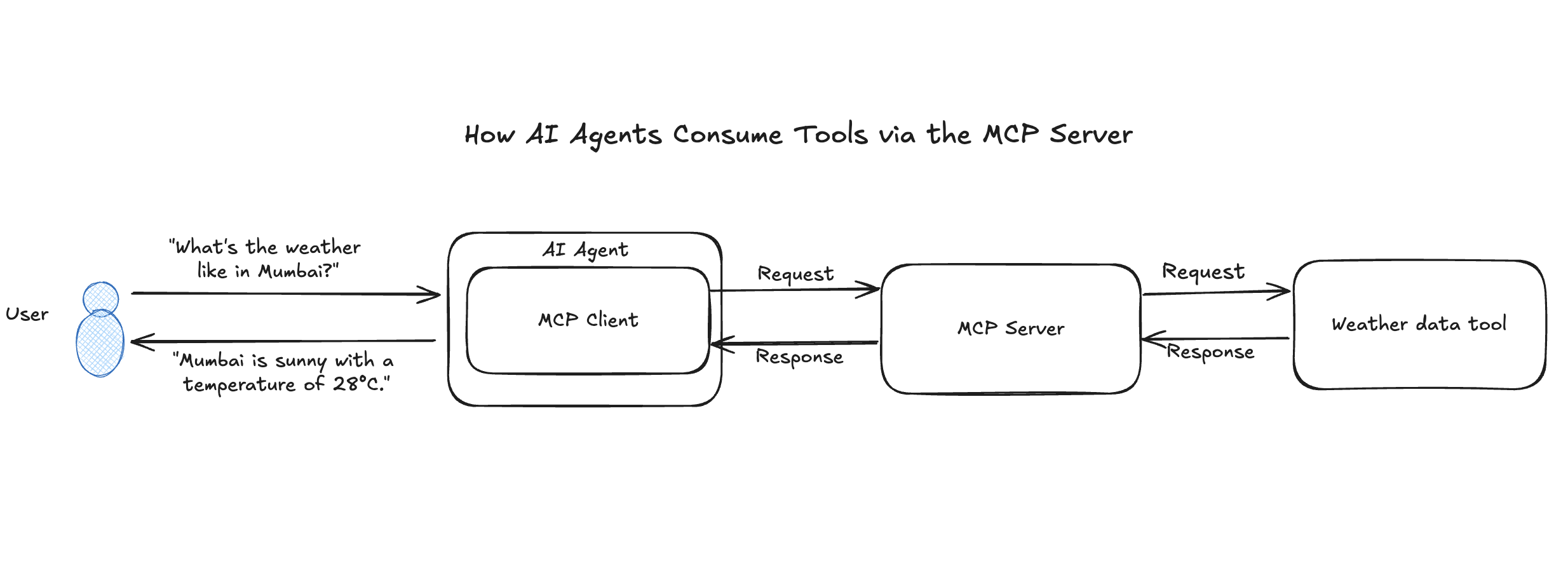

How AI Agents Consume Tools via the MCP Server

The AI agent's interaction with MCP Tools follows a multi-step process:

- User Prompt: A user asks something like “What’s the weather like in Mumbai?”

- Agent Reasoning: Determines it needs a weather data tool.

- Tool Request: Sends an MCP-compliant request with the city name to the MCP Server.

- Server Routing: The server forwards the request to the appropriate tool.

- Tool Execution: The tool fetches weather data (e.g., via API).

- Response to Agent: Server sends tool output back to the agent.

- Final Response: Agent returns a human-readable response like: “The current weather in Mumbai is sunny with a temperature of 28°C.”

Best Practices

To build robust and efficient applications using the AI Agent control, follow these guidelines:

- Define Clear Tool Interfaces: Tools should have specific, well-scoped functions.

- Use Descriptive Tool Names: Ensure names and descriptions are easily understandable.

- Implement Error Handling: Build in feedback mechanisms for failure cases.

- Optimize for Performance: Minimize response times for better UX.

- Secure Your Tools: Restrict access to sensitive APIs using MCP Server security features.

- Iterate and Refine: Continuously test and improve tool definitions and descriptions for better agent performance.